Publications

2024

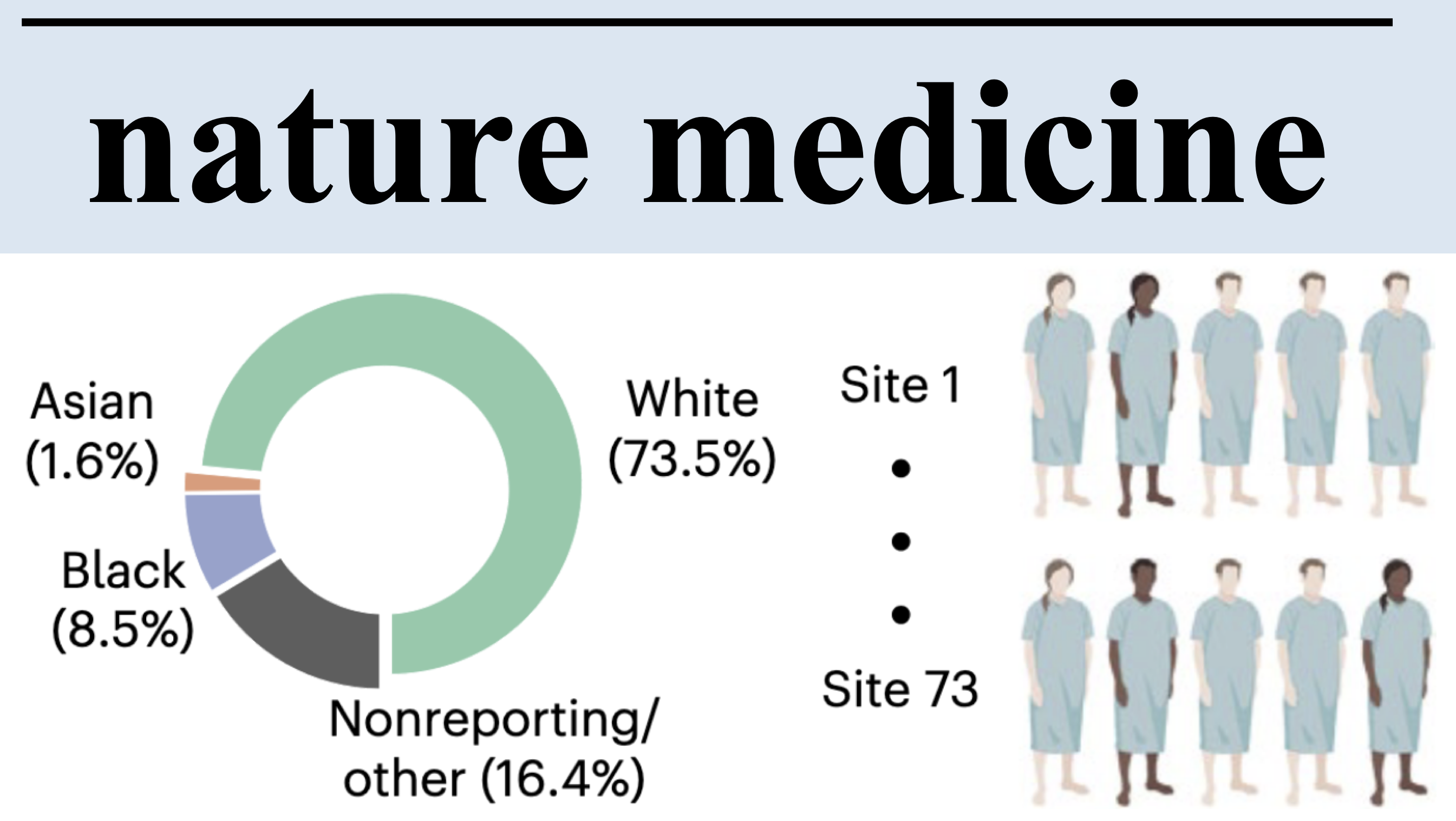

Demographic bias in misdiagnosis by computational pathology models. Anurag Vaidya, Richard J Chen, Drew FK Williamson, Andrew H Song, Guillaume Jaume, Yuzhe Yang, Thomas Hartvigsen, Emma C Dyer, Ming Y Lu, Jana Lipkova, and others. Nature Medicine.

@article{vaidya2024demographic,
  title = {Demographic bias in misdiagnosis by computational pathology models},
  author = {Vaidya, Anurag and Chen, Richard J and Williamson, Drew FK and Song, Andrew H and Jaume, Guillaume and Yang, Yuzhe and Hartvigsen, Thomas and Dyer, Emma C and Lu, Ming Y and Lipkova, Jana and others},
  journal = {Nature Medicine},
  volume = {30},
  number = {4},
  pages = {1174--1190},
  year = {2024},
  publisher = {Nature Publishing Group US New York},
  html = {https://www.nature.com/articles/s41591-024-02885-z},
  abbr = {vaidya2024demographic.png}
}

Despite increasing numbers of regulatory approvals, deep learning-based computational pathology systems often overlook the impact of demographic factors on performance, potentially leading to biases. This concern is all the more important as computational pathology has leveraged large public datasets that underrepresent certain demographic groups. Using publicly available data from The Cancer Genome Atlas and the EBRAINS brain tumor atlas, as well as internal patient data, we show that whole-slide image classification models display marked performance disparities across different demographic groups when used to subtype breast and lung carcinomas and to predict IDH1 mutations in gliomas. For example, when using common modeling approaches, we observed performance gaps (in area under the receiver operating characteristic curve) between white and Black patients of 3.0% for breast cancer subtyping, 10.9% for lung cancer subtyping and 16.0% for IDH1 mutation prediction in gliomas. We found that richer feature representations obtained from self-supervised vision foundation models reduce performance variations between groups. These representations provide improvements upon weaker models even when those weaker models are combined with state-of-the-art bias mitigation strategies and modeling choices. Nevertheless, self-supervised vision foundation models do not fully eliminate these discrepancies, highlighting the continuing need for bias mitigation efforts in computational pathology. Finally, we demonstrate that our results extend to other demographic factors beyond patient race. Given these findings, we encourage regulatory and policy agencies to integrate demographic-stratified evaluation into their assessment guidelines.

The age of foundation models. Jana Lipkova and Jakob Nikolas Kather. Nature Reviews Clinical Oncology.

@article{lipkova2024age,
  title = {The age of foundation models},
  author = {Lipkova, Jana and Kather, Jakob Nikolas},
  journal = {Nature Reviews Clinical Oncology},
  pages = {1--2},
  year = {2024},
  publisher = {Nature Publishing Group UK London},
  html = {https://www.nature.com/articles/s41571-024-00941-8},
  abbr = {lipkova2024age.png}
}

The development of clinically relevant artificial intelligence (AI) models has traditionally required access to extensive labelled datasets, which inevitably centre AI advances around large centres and private corporations. Data availability has also dictated the development of AI applications: most studies focus on common cancer types, and leave rare diseases behind. However, this paradigm is changing with the advent of foundation models, which enable the training of more powerful and robust AI systems using much smaller datasets.

2023

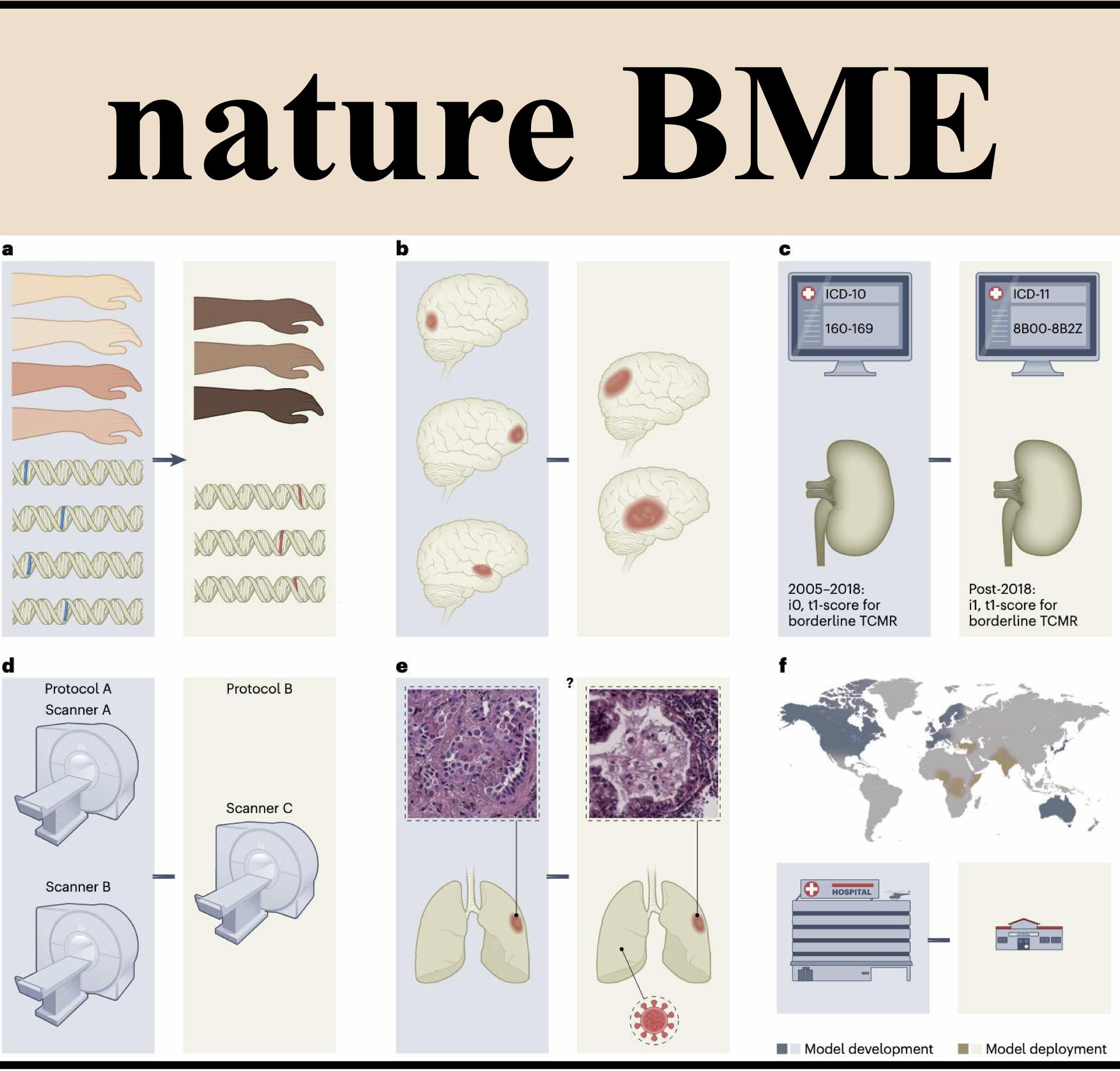

Algorithmic fairness in artificial intelligence for medicine and healthcare. Richard J Chen, Judy J Wang, Drew FK Williamson, Tiffany Y Chen, Jana Lipkova, Ming Y Lu, Sharifa Sahai, and Faisal Mahmood. Nature Biomedical Engineering.

@article{chen2023algorithmic,
  title = {Algorithmic fairness in artificial intelligence for medicine and healthcare},
  author = {Chen, Richard J and Wang, Judy J and Williamson, Drew FK and Chen, Tiffany Y and Lipkova, Jana and Lu, Ming Y and Sahai, Sharifa and Mahmood, Faisal},
  journal = {Nature Biomedical Engineering},
  volume = {7},
  number = {6},
  pages = {719--742},
  year = {2023},
  publisher = {Nature Publishing Group UK London},
  html = {https://doi.org/10.1038/s41551-023-01056-8},
  abbr = {chen2023algorithmic.png}
}

In healthcare, the development and deployment of insufficiently fair systems of artificial intelligence (AI) can undermine the delivery of equitable care. Assessments of AI models stratified across subpopulations have revealed inequalities in how patients are diagnosed, treated and billed. In this Perspective, we outline fairness in machine learning through the lens of healthcare, and discuss how algorithmic biases (in data acquisition, genetic variation and intra-observer labelling variability, in particular) arise in clinical workflows and the resulting healthcare disparities. We also review emerging technology for mitigating biases via disentanglement, federated learning and model explainability, and their role in the development of AI-based software as a medical device.

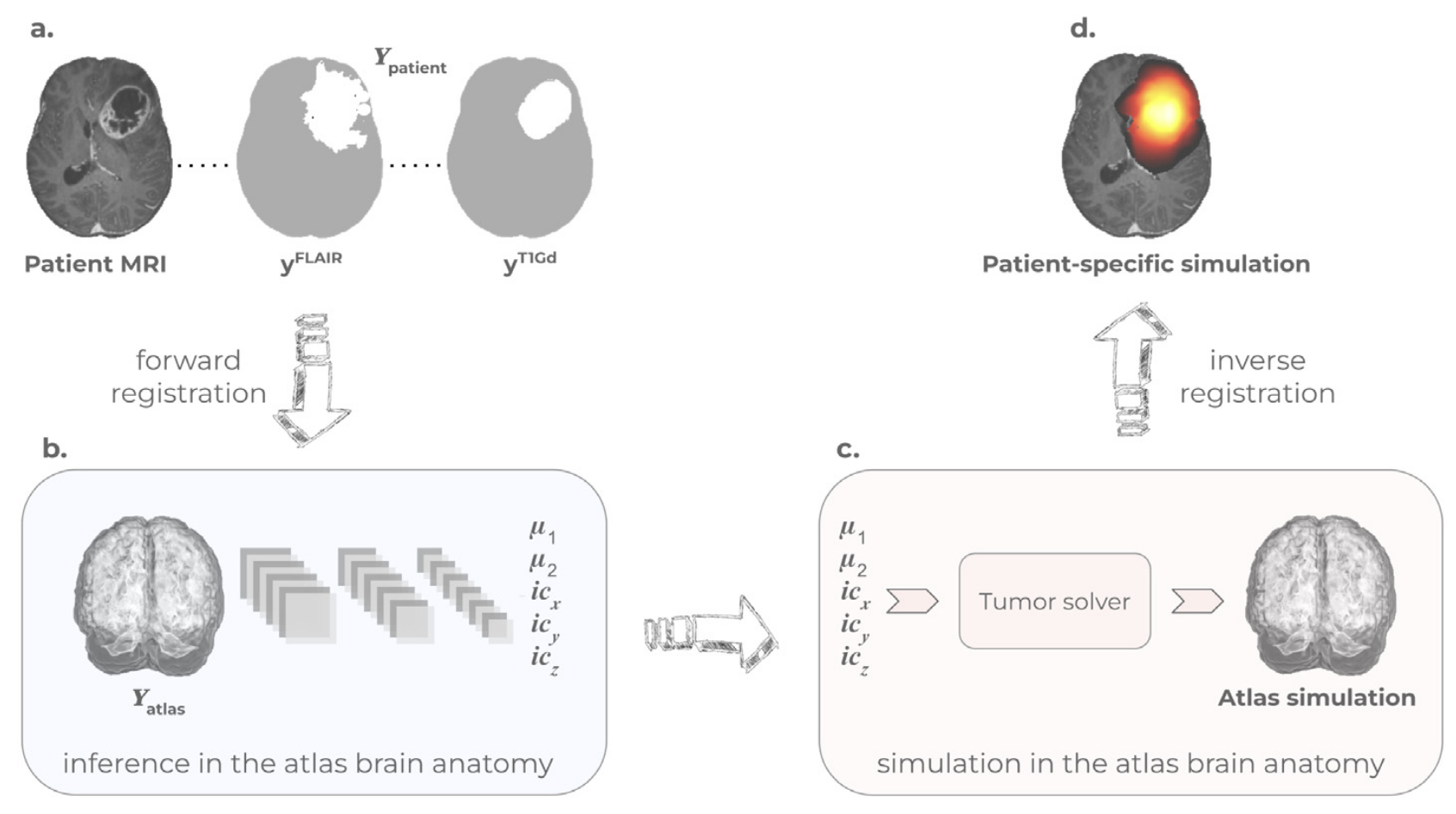

Learn-Morph-Infer: a new way of solving the inverse problem for brain tumor modeling. Ivan Ezhov, Kevin Scibilia, Katharina Franitza, Felix Steinbauer, Suprosanna Shit, Lucas Zimmer, Jana Lipkova, Florian Kofler, Johannes C Paetzold, Luca Canalini, and others. Medical Image Analysis.

@article{ezhov2023learn,
  title = {Learn-Morph-Infer: a new way of solving the inverse problem for brain tumor modeling},
  author = {Ezhov, Ivan and Scibilia, Kevin and Franitza, Katharina and Steinbauer, Felix and Shit, Suprosanna and Zimmer, Lucas and Lipkova, Jana and Kofler, Florian and Paetzold, Johannes C and Canalini, Luca and others},
  journal = {Medical Image Analysis},
  volume = {83},
  pages = {102672},
  year = {2023},
  publisher = {Elsevier},
  html = {https://www.sciencedirect.com/science/article/pii/S1361841522003000},
  abbr = {ezhov2023learn.png}
}

Current treatment planning of patients diagnosed with a brain tumor, such as glioma, could significantly benefit by accessing the spatial distribution of tumor cell concentration. Existing diagnostic modalities, e.g. magnetic resonance imaging (MRI), contrast sufficiently well areas of high cell density. In gliomas, however, they do not portray areas of low cell concentration, which can often serve as a source for the secondary appearance of the tumor after treatment. To estimate tumor cell densities beyond the visible boundaries of the lesion, numerical simulations of tumor growth could complement imaging information by providing estimates of full spatial distributions of tumor cells. Over recent years a corpus of literature on medical image-based tumor modeling was published. It includes different mathematical formalisms describing the forward tumor growth model. Alongside, various parametric inference schemes were developed to perform an efficient tumor model personalization, i.e. solving the inverse problem. However, the unifying drawback of all existing approaches is the time complexity of the model personalization which prohibits a potential integration of the modeling into clinical settings. In this work, we introduce a deep learning based methodology for inferring the patient-specific spatial distribution of brain tumors from T1Gd and FLAIR MRI medical scans. Coined as Learn-Morph-Infer, the method achieves real-time performance in the order of minutes on widely available hardware and the compute time is stable across tumor models of different complexity, such as reaction–diffusion and reaction–advection–diffusion models. We believe the proposed inverse solution approach not only bridges the way for clinical translation of brain tumor personalization but can also be adopted to other scientific and engineering domains.

The liver tumor segmentation benchmark (lits). Patrick Bilic, Patrick Christ, Hongwei Bran Li, Eugene Vorontsov, Avi Ben-Cohen, Georgios Kaissis, Adi Szeskin, Colin Jacobs, Gabriel Efrain Humpire Mamani, Gabriel Chartrand, and others. Medical Image Analysis.

@article{bilic2023liver,
  title = {The liver tumor segmentation benchmark (lits)},
  author = {Bilic, Patrick and Christ, Patrick and Li, Hongwei Bran and Vorontsov, Eugene and Ben-Cohen, Avi and Kaissis, Georgios and Szeskin, Adi and Jacobs, Colin and Mamani, Gabriel Efrain Humpire and Chartrand, Gabriel and others},
  journal = {Medical Image Analysis},
  volume = {84},
  pages = {102680},
  year = {2023},
  publisher = {Elsevier},
  abbr = {bilic2023liver.png},
  html = {https://www.sciencedirect.com/science/article/pii/S1361841522003085}
}

In this work, we report the set-up and results of the Liver Tumor Segmentation Benchmark (LITS) organized in conjunction with the IEEE International Symposium on Biomedical Imaging (ISBI) 2016 and International Conference On Medical Image Computing Computer Assisted Intervention (MICCAI) 2017. Twenty four valid state-of-the-art liver and liver tumor segmentation algorithms were applied to a set of 131 computed tomography (CT) volumes with different types of tumor contrast levels (hyper-/hypo-intense), abnormalities in tissues (metastasectomie), size and varying amount of lesions. The submitted algorithms have been tested on 70 undisclosed volumes. The dataset is created in collaboration with seven hospitals and research institutions and manually reviewed by three independent radiologists. We found that not a single algorithm performed best for liver and tumors. The best liver segmentation algorithm achieved a Dice score of 0.96 (MICCAI) whereas for tumor segmentation the best algorithm evaluated at 0.67 (ISBI) and 0.70 (MICCAI). The LITS image data and manual annotations continue to be publicly available through an online evaluation system as an ongoing benchmarking resource.

2022

Deep learning-enabled assessment of cardiac allograft rejection from endomyocardial biopsies. Jana Lipkova, Tiffany Y Chen, Ming Y Lu, Richard J Chen, Maha Shady, Mane Williams, Jingwen Wang, Zahra Noor, Richard N Mitchell, Mehmet Turan, and others. Nature Medicine.

@article{lipkova2022deep,
  title = {Deep learning-enabled assessment of cardiac allograft rejection from endomyocardial biopsies},
  author = {Lipkova, Jana and Chen, Tiffany Y and Lu, Ming Y and Chen, Richard J and Shady, Maha and Williams, Mane and Wang, Jingwen and Noor, Zahra and Mitchell, Richard N and Turan, Mehmet and others},
  journal = {Nature Medicine},
  volume = {28},
  number = {3},
  pages = {575--582},
  year = {2022},
  publisher = {Nature Publishing Group},
  html = {https://www.nature.com/articles/s41591-022-01709-2},
  software = {https://github.com/mahmoodlab/CRANE},
  demo = {http://crane.mahmoodlab.org/},
  abbr = {lipkova2022deep.png}
}

Endomyocardial biopsy (EMB) screening represents the standard of care for detecting allograft rejections after heart transplant. Manual interpretation of EMBs is affected by substantial interobserver and intraobserver variability, which often leads to inappropriate treatment with immunosuppressive drugs, unnecessary follow-up biopsies and poor transplant outcomes. Here we present a deep learning-based artificial intelligence (AI) system for automated assessment of gigapixel whole-slide images obtained from EMBs, which simultaneously addresses detection, subtyping and grading of allograft rejection. To assess model performance, we curated a large dataset from the United States, as well as independent test cohorts from Turkey and Switzerland, which includes large-scale variability across populations, sample preparations and slide scanning instrumentation. The model detects allograft rejection with an area under the receiver operating characteristic curve (AUC) of 0.962; assesses the cellular and antibody-mediated rejection type with AUCs of 0.958 and 0.874, respectively; detects Quilty B lesions, benign mimics of rejection, with an AUC of 0.939; and differentiates between low-grade and high-grade rejections with an AUC of 0.833. In a human reader study, the AI system showed non-inferior performance to conventional assessment and reduced interobserver variability and assessment time. This robust evaluation of cardiac allograft rejection paves the way for clinical trials to establish the efficacy of AI-assisted EMB assessment and its potential for improving heart transplant outcomes.

Artificial intelligence for multimodal data integration in oncology. Jana Lipkova, Richard J Chen, Bowen Chen, Ming Y Lu, Matteo Barbieri, Daniel Shao, Anurag J Vaidya, Chengkuan Chen, Luoting Zhuang, Drew FK Williamson, and others. Cancer Cell.

@article{lipkova2022ai,
  title = {Artificial intelligence for multimodal data integration in oncology},
  author = {Lipkova, Jana and Chen, Richard J and Chen, Bowen and Lu, Ming Y and Barbieri, Matteo and Shao, Daniel and Vaidya, Anurag J and Chen, Chengkuan and Zhuang, Luoting and Williamson, Drew FK and others},
  journal = {Cancer Cell},
  volume = {40},
  number = {10},
  pages = {1095--1110},
  year = {2022},
  publisher = {Elsevier},
  html = {https://doi.org/10.1016/j.ccell.2022.09.012},
  abbr = {lipkova2022ai.png}
}

In oncology, the patient state is characterized by a whole spectrum of modalities, ranging from radiology, histology, and genomics to electronic health records. Current artificial intelligence (AI) models operate mainly in the realm of a single modality, neglecting the broader clinical context, which inevitably diminishes their potential. Integration of different data modalities provides opportunities to increase robustness and accuracy of diagnostic and prognostic models, bringing AI closer to clinical practice. AI models are also capable of discovering novel patterns within and across modalities suitable for explaining differences in patient outcomes or treatment resistance. The insights gleaned from such models can guide exploration studies and contribute to the discovery of novel biomarkers and therapeutic targets. To support these advances, here we present a synopsis of AI methods and strategies for multimodal data fusion and association discovery. We outline approaches for AI interpretability and directions for AI-driven exploration through multimodal data interconnections. We examine challenges in clinical adoption and discuss emerging solutions.

Pan-cancer integrative histology-genomic analysis via multimodal deep learning. Richard J Chen, Ming Y Lu, Drew FK Williamson, Tiffany Y Chen, Jana Lipkova, Zahra Noor, Muhammad Shaban, Maha Shady, Mane Williams, Bumjin Joo, and others. Cancer Cell.

@article{chen2022pan,
  title = {Pan-cancer integrative histology-genomic analysis via multimodal deep learning},
  author = {Chen, Richard J and Lu, Ming Y and Williamson, Drew FK and Chen, Tiffany Y and Lipkova, Jana and Noor, Zahra and Shaban, Muhammad and Shady, Maha and Williams, Mane and Joo, Bumjin and others},
  journal = {Cancer Cell},
  volume = {40},
  number = {8},
  pages = {865--878},
  year = {2022},
  publisher = {Elsevier},
  html = {https://www.sciencedirect.com/science/article/pii/s1535610822003178},
  demo = {http://pancancer.mahmoodlab.org/},
  software = {https://github.com/mahmoodlab/porpoise},
  abbr = {chen2022pan.png}
}

The rapidly emerging field of computational pathology has demonstrated promise in developing objective prognostic models from histology images. However, most prognostic models are either based on histology or genomics alone and do not address how these data sources can be integrated to develop joint image-omic prognostic models. Additionally, identifying explainable morphological and molecular descriptors from these models that govern such prognosis is of interest. We use multimodal deep learning to jointly examine pathology whole-slide images and molecular profile data from 14 cancer types. Our weakly supervised, multimodal deep-learning algorithm is able to fuse these heterogeneous modalities to predict outcomes and discover prognostic features that correlate with poor and favorable outcomes. We present all analyses for morphological and molecular correlates of patient prognosis across the 14 cancer types at both a disease and a patient level in an interactive open-access database to allow for further exploration, biomarker discovery, and feature assessment.

Modelling glioma progression, mass effect and intracranial pressure in patient anatomy. Jana Lipková, Bjoern Menze, Benedikt Wiestler, Petros Koumoutsakos, and John S Lowengrub. Journal of the Royal Society Interface.

@article{lipkova2022modelling,
  title = {Modelling glioma progression, mass effect and intracranial pressure in patient anatomy},
  author = {Lipkov{\'a}, Jana and Menze, Bjoern and Wiestler, Benedikt and Koumoutsakos, Petros and Lowengrub, John S},
  journal = {Journal of the Royal Society Interface},
  volume = {19},
  number = {188},
  pages = {20210922},
  year = {2022},
  publisher = {The Royal Society},
  html = {https://doi.org/10.1098/rsif.2021.0922},
  software = {https://github.com/JanaLipkova/GliomaSolver},
  abbr = {lipkova2022modelling.png}
}

Increased intracranial pressure is the source of most critical symptoms in patients with glioma, and often the main cause of death. Clinical interventions could benefit from non-invasive estimates of the pressure distribution in the patient’s parenchyma provided by computational models. However, existing glioma models do not simulate the pressure distribution and they rely on a large number of model parameters, which complicates their calibration from available patient data. Here we present a novel model for glioma growth, pressure distribution and corresponding brain deformation. The distinct feature of our approach is that the pressure is directly derived from tumour dynamics and patient-specific anatomy, providing non-invasive insights into the patient’s state. The model predictions allow estimation of critical conditions such as intracranial hypertension, brain midline shift or neurological and cognitive impairments. A diffuse-domain formalism is employed to allow for efficient numerical implementation of the model in the patient-specific brain anatomy. The model is tested on synthetic and clinical cases. To facilitate clinical deployment, a high-performance computing implementation of the model has been publicly released.

Federated learning for computational pathology on gigapixel whole slide images. Ming Y Lu, Richard J Chen, Dehan Kong, Jana Lipkova, Rajendra Singh, Drew FK Williamson, Tiffany Y Chen, and Faisal Mahmood. Medical Image Analysis.

@article{lu2022federated,
  title = {Federated learning for computational pathology on gigapixel whole slide images},
  author = {Lu, Ming Y and Chen, Richard J and Kong, Dehan and Lipkova, Jana and Singh, Rajendra and Williamson, Drew FK and Chen, Tiffany Y and Mahmood, Faisal},
  journal = {Medical Image Analysis},
  volume = {76},
  pages = {102298},
  year = {2022},
  publisher = {Elsevier},
  html = {https://www.sciencedirect.com/science/article/pii/S1361841521003431},
  software = {https://github.com/mahmoodlab/HistoFL},
  arxiv = {https://arxiv.org/abs/2009.10190},
  abbr = {lu2022federated.png}
}

Deep Learning-based computational pathology algorithms have demonstrated profound ability to excel in a wide array of tasks that range from characterization of well known morphological phenotypes to predicting non-human-identifiable features from histology such as molecular alterations. However, the development of robust, adaptable, and accurate deep learning-based models often relies on the collection and time-costly curation of large high-quality annotated training data that should ideally come from diverse sources and patient populations to cater for the heterogeneity that exists in such datasets. Multi-centric and collaborative integration of medical data across multiple institutions can naturally help overcome this challenge and boost the model performance but is limited by privacy concerns amongst other difficulties that may arise in the complex data sharing process as models scale towards using hundreds of thousands of gigapixel whole slide images. In this paper, we introduce privacy-preserving federated learning for gigapixel whole slide images in computational pathology using weakly-supervised attention multiple instance learning and differential privacy. We evaluated our approach on two different diagnostic problems using thousands of histology whole slide images with only slide-level labels. Additionally, we present a weakly-supervised learning framework for survival prediction and patient stratification from whole slide images and demonstrate its effectiveness in a federated setting. Our results show that using federated learning, we can effectively develop accurate weakly supervised deep learning models from distributed data silos without direct data sharing and its associated complexities, while also preserving differential privacy using randomized noise generation.

2021

AI-based pathology predicts origins for cancers of unknown primary. Ming Y Lu, Tiffany Y Chen, Drew FK Williamson, Melissa Zhao, Maha Shady, Jana Lipkova, and Faisal Mahmood. Nature.

@article{lu2021ai,
  title = {AI-based pathology predicts origins for cancers of unknown primary},
  author = {Lu, Ming Y and Chen, Tiffany Y and Williamson, Drew FK and Zhao, Melissa and Shady, Maha and Lipkova, Jana and Mahmood, Faisal},
  journal = {Nature},
  volume = {594},
  number = {7861},
  pages = {106--110},
  year = {2021},
  publisher = {Nature Publishing Group},
  html = {https://dx.doi.org/10.1038/s41586-021-03512-4},
  software = {https://github.com/mahmoodlab/TOAD},
  demo = {http://clam.mahmoodlab.org/},
  abbr = {lu2021ai.jpg}
}

Cancer of unknown primary (CUP) origin is an enigmatic group of diagnoses in which the primary anatomical site of tumour origin cannot be determined. This poses a considerable challenge, as modern therapeutics are predominantly specific to the primary tumour. Recent research has focused on using genomics and transcriptomics to identify the origin of a tumour. However, genomic testing is not always performed and lacks clinical penetration in low-resource settings. Here, to overcome these challenges, we present a deep-learning-based algorithm, Tumour Origin Assessment via Deep Learning (TOAD), that can provide a differential diagnosis for the origin of the primary tumour using routinely acquired histology slides. We used whole-slide images of tumours with known primary origins to train a model that simultaneously identifies the tumour as primary or metastatic and predicts its site of origin. On our held-out test set of tumours with known primary origins, the model achieved a top-1 accuracy of 0.83 and a top-3 accuracy of 0.96, whereas on our external test set it achieved top-1 and top-3 accuracies of 0.80 and 0.93, respectively. We further curated a dataset of 317 cases of CUP for which a differential diagnosis was assigned. Our model predictions resulted in concordance for 61% of cases and a top-3 agreement of 82%. TOAD can be used as an assistive tool to assign a differential diagnosis to complicated cases of metastatic tumours and CUPs and could be used in conjunction with or in lieu of ancillary tests and extensive diagnostic work-ups to reduce the occurrence of CUP.

Algorithm fairness in AI for medicine and healthcare. Richard J Chen, Tiffany Y Chen, Jana Lipkova, Judy J Wang, Drew FK Williamson, Ming Y Lu, Sharifa Sahai, and Faisal Mahmood. arXiv preprint arXiv:2110.00603.

@article{chen2021algorithm,
  title = {Algorithm fairness in AI for medicine and healthcare},
  author = {Chen, Richard J and Chen, Tiffany Y and Lipkova, Jana and Wang, Judy J and Williamson, Drew FK and Lu, Ming Y and Sahai, Sharifa and Mahmood, Faisal},
  journal = {arXiv preprint arXiv:2110.00603},
  year = {2021},
  abbr = {chen2021algorithm.png},
  html = {http://arxiv.org/abs/2110.00603}
}

In the current development and deployment of many artificial intelligence (AI) systems in healthcare, algorithm fairness is a challenging problem in delivering equitable care. Recent evaluations of AI models stratified across race sub-populations have revealed enormous inequalities in how patients are diagnosed, given treatments, and billed for healthcare costs. In this perspective article, we summarize the intersectional field of fairness in machine learning through the context of current issues in healthcare, and outline how algorithmic biases (e.g., image acquisition, genetic variation, intra-observer labeling variability) arise in current clinical workflows and their resulting healthcare disparities. Lastly, we also review emerging strategies for mitigating bias via decentralized learning, disentanglement, and model explainability.

2020

CXCR4-targeted PET imaging of central nervous system B-cell lymphoma. Peter Herhaus, Jana Lipkova, Felicitas Lammer, Igor Yakushev, Tibor Vag, Julia Slotta-Huspenina, Stefan Habringer, Constantin Lapa, Tobias Pukrop, Dirk Hellwig, and others. Journal of Nuclear Medicine.

@article{herhaus2020cxcr4,
  title = {CXCR4-targeted PET imaging of central nervous system B-cell lymphoma},
  author = {Herhaus, Peter and Lipkova, Jana and Lammer, Felicitas and Yakushev, Igor and Vag, Tibor and Slotta-Huspenina, Julia and Habringer, Stefan and Lapa, Constantin and Pukrop, Tobias and Hellwig, Dirk and others},
  journal = {Journal of Nuclear Medicine},
  volume = {61},
  number = {12},
  pages = {1765--1771},
  year = {2020},
  publisher = {Society of Nuclear Medicine},
  html = {https://jnm.snmjournals.org/content/61/12/1765.short},
  abbr = {herhaus2020cxcr4.png}
}

C-X-C chemokine receptor 4 (CXCR4) is a transmembrane chemokine receptor involved in growth, survival, and dissemination of cancer, including aggressive B-cell lymphoma. MRI is the standard imaging technology for central nervous system (CNS) involvement of B-cell lymphoma and provides high sensitivity but moderate specificity. Therefore, novel molecular and functional imaging strategies are urgently required.

Predicting glioblastoma recurrence from preoperative MR scans using fractional-anisotropy maps with free-water suppression. Marie-Christin Metz, Miguel Molina-Romero, Jana Lipkova, Jens Gempt, Friederike Liesche-Starnecker, Paul Eichinger, Lioba Grundl, Bjoern Menze, Stephanie E Combs, Claus Zimmer, and others. Cancers.

@article{metz2020predicting,
  title = {Predicting glioblastoma recurrence from preoperative MR scans using fractional-anisotropy maps with free-water suppression},
  author = {Metz, Marie-Christin and Molina-Romero, Miguel and Lipkova, Jana and Gempt, Jens and Liesche-Starnecker, Friederike and Eichinger, Paul and Grundl, Lioba and Menze, Bjoern and Combs, Stephanie E and Zimmer, Claus and others},
  journal = {Cancers},
  volume = {12},
  number = {3},
  pages = {728},
  year = {2020},
  publisher = {Multidisciplinary Digital Publishing Institute},
  html = {https://www.mdpi.com/669090},
  abbr = {metz2020predicting.png}
}

Diffusion tensor imaging (DTI), and fractional-anisotropy (FA) maps in particular, have shown promise in predicting areas of tumor recurrence in glioblastoma. However, analysis of peritumoral edema, where most recurrences occur, is impeded by free-water contamination. In this study, we evaluated the benefits of a novel, deep-learning-based approach for the free-water correction (FWC) of DTI data for prediction of later recurrence. We investigated 35 glioblastoma cases from our prospective glioma cohort. A preoperative MR image and the first MR scan showing tumor recurrence were semiautomatically segmented into areas of contrast-enhancing tumor, edema, or recurrence of the tumor. The 10th, 50th and 90th percentiles and mean of FA and mean-diffusivity (MD) values (both for the original and FWC–DTI data) were collected for areas with and without recurrence in the peritumoral edema. We found significant differences in the FWC–FA maps between areas of recurrence-free edema and areas with later tumor recurrence, where differences in noncorrected FA maps were less pronounced. Consequently, a generalized mixed-effect model had a significantly higher area under the curve when using FWC–FA maps (AUC = 0.9) compared to noncorrected maps (AUC = 0.77, p < 0.001). This may reflect tumor infiltration that is not visible in conventional imaging, and may therefore reveal important information for personalized treatment decisions.

BraTS Toolkit: translating BraTS brain tumor segmentation algorithms into clinical and scientific practice
Florian Kofler, Christoph Berger, Diana Waldmannstetter, Jana Lipkova, Ivan Ezhov, Giles Tetteh, Jan Kirschke, Claus Zimmer, Benedikt Wiestler, and Bjoern H Menze
Frontiers in neuroscience

@article{kofler2020brats, title = {BraTS Toolkit: translating BraTS brain tumor segmentation algorithms into clinical and scientific practice}, author = {Kofler, Florian and Berger, Christoph and Waldmannstetter, Diana and Lipkova, Jana and Ezhov, Ivan and Tetteh, Giles and Kirschke, Jan and Zimmer, Claus and Wiestler, Benedikt and Menze, Bjoern H}, journal = {Frontiers in neuroscience}, pages = {125}, year = {2020}, publisher = {Frontiers}, html = {https://doi.org/10.3389/fnins.2020.00125}, abbr = {kofler2020brats.png} }

Despite great advances in brain tumor segmentation and clear clinical need, translation of state-of-the-art computational methods into clinical routine and scientific practice remains a major challenge. Several factors impede successful implementations, including data standardization and preprocessing. However, these steps are pivotal for the deployment of state-of-the-art image segmentation algorithms. To overcome these issues, we present BraTS Toolkit. BraTS Toolkit is a holistic approach to brain tumor segmentation and consists of three components. First, the BraTS Preprocessor facilitates data standardization and preprocessing for researchers and clinicians alike. It covers the entire image analysis workflow prior to tumor segmentation, from image conversion and registration to brain extraction. Second, BraTS Segmentor enables orchestration of BraTS brain tumor segmentation algorithms for generation of fully-automated segmentations. Finally, BraTS Fusionator can combine the resulting candidate segmentations into consensus segmentations using fusion methods such as majority voting and iterative SIMPLE fusion. The capabilities of our tools are illustrated with a practical example to enable easy translation to clinical and scientific practice.
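
The majority-voting fusion named in the abstract can be illustrated with a minimal sketch. This is not the BraTS Toolkit API: the function name `majority_vote`, the flattened binary-mask representation, and the toy candidates are assumptions made only for illustration.

```python
from typing import List, Sequence


def majority_vote(masks: Sequence[Sequence[int]]) -> List[int]:
    """Fuse binary candidate masks (flattened voxel lists) by strict majority.

    Hypothetical helper for illustration; not part of BraTS Toolkit.
    """
    if not masks:
        raise ValueError("need at least one candidate mask")
    n_voxels = len(masks[0])
    if any(len(m) != n_voxels for m in masks):
        raise ValueError("all masks must have the same number of voxels")

    n_candidates = len(masks)
    fused = []
    for voxel_votes in zip(*masks):
        # A voxel is foreground only if more than half of the candidates agree.
        fused.append(1 if 2 * sum(voxel_votes) > n_candidates else 0)
    return fused


# Three toy candidate segmentations of the same five voxels.
candidates = [
    [0, 1, 1, 0, 1],
    [0, 1, 0, 0, 1],
    [1, 1, 0, 0, 0],
]
consensus = majority_vote(candidates)
print(consensus)
```

With an even number of candidates, ties are resolved as background here; that tie rule is a design choice of this sketch, not something the abstract specifies.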

Red-GAN: Attacking class imbalance via conditioned generation. Yet another medical imaging perspective.
Ahmad B Qasim, Ivan Ezhov, Suprosanna Shit, Oliver Schoppe, Johannes C Paetzold, Anjany Sekuboyina, Florian Kofler, Jana Lipkova, Hongwei Li, and Bjoern Menze
Medical Imaging with Deep Learning

@inproceedings{qasim2020red, title = {Red-GAN: Attacking class imbalance via conditioned generation. Yet another medical imaging perspective.}, author = {Qasim, Ahmad B and Ezhov, Ivan and Shit, Suprosanna and Schoppe, Oliver and Paetzold, Johannes C and Sekuboyina, Anjany and Kofler, Florian and Lipkova, Jana and Li, Hongwei and Menze, Bjoern}, booktitle = {Medical Imaging with Deep Learning}, pages = {655--668}, year = {2020}, organization = {PMLR}, abbr = {qasim2020red.png}, html = {https://proceedings.mlr.press/v121/qasim20a.html}, software = {https://github.com/IvanEz/Red-GAN} }

Exploiting learning algorithms under scarce data regimes is a limitation and a reality of the medical imaging field. In an attempt to mitigate the problem, we propose a data augmentation protocol based on generative adversarial networks. We condition the networks on pixel-level information (segmentation mask) and on global-level information (acquisition environment or lesion type). Such conditioning provides immediate access to the image-label pairs while controlling global class-specific appearance of the synthesized images. To stimulate synthesis of the features relevant for the segmentation task, an additional passive player in the form of a segmentor is introduced into the adversarial game. We validate the approach on two medical datasets: BraTS and ISIC. By controlling the class distribution through injection of synthetic images into the training set we achieve control over the accuracy levels of the datasets’ classes.

2019

Personalized radiotherapy design for glioblastoma: Integrating mathematical tumor models, multimodal scans, and Bayesian inference
Jana Lipková, Panagiotis Angelikopoulos, Stephen Wu, Esther Alberts, Benedikt Wiestler, Christian Diehl, Christine Preibisch, Thomas Pyka, Stephanie E Combs, Panagiotis Hadjidoukas, and others
IEEE transactions on medical imaging

@article{lipkova2019personalized, title = {Personalized radiotherapy design for glioblastoma: Integrating mathematical tumor models, multimodal scans, and bayesian inference}, author = {Lipkov{\'a}, Jana and Angelikopoulos, Panagiotis and Wu, Stephen and Alberts, Esther and Wiestler, Benedikt and Diehl, Christian and Preibisch, Christine and Pyka, Thomas and Combs, Stephanie E and Hadjidoukas, Panagiotis and others}, journal = {IEEE transactions on medical imaging}, volume = {38}, number = {8}, pages = {1875--1884}, year = {2019}, publisher = {IEEE}, abbr = {lipkova2019personalized.png}, html = {https://ieeexplore.ieee.org/abstract/document/8654016/}, software = {https://github.com/JanaLipkova/GliomaSolver} }

Glioblastoma (GBM) is a highly invasive brain tumor, whose cells infiltrate surrounding normal brain tissue beyond the lesion outlines visible in the current medical scans. These infiltrative cells are treated mainly by radiotherapy. Existing radiotherapy plans for brain tumors derive from population studies and scarcely account for patient-specific conditions. Here, we provide a Bayesian machine learning framework for the rational design of improved, personalized radiotherapy plans using mathematical modeling and patient multimodal medical scans. Our method, for the first time, integrates complementary information from high-resolution MRI scans and highly specific FET-PET metabolic maps to infer tumor cell density in GBM patients. The Bayesian framework quantifies imaging and modeling uncertainties and predicts patient-specific tumor cell density with credible intervals. The proposed methodology relies only on data acquired at a single time point and, thus, is applicable to standard clinical settings. An initial clinical population study shows that the radiotherapy plans generated from the inferred tumor cell infiltration maps spare more healthy tissue thereby reducing radiation toxicity while yielding comparable accuracy with standard radiotherapy protocols. Moreover, the inferred regions of high tumor cell densities coincide with the tumor radioresistant areas, providing guidance for personalized dose-escalation. The proposed integration of multimodal scans and mathematical modeling provides a robust, non-invasive tool to assist personalized radiotherapy design.

Neural parameters estimation for brain tumor growth modeling
Ivan Ezhov, Jana Lipkova, Suprosanna Shit, Florian Kofler, Nore Collomb, Benjamin Lemasson, Emmanuel Barbier, and Bjoern Menze
International Conference on Medical Image Computing and Computer-Assisted Intervention

@inproceedings{ezhov2019neural, title = {Neural parameters estimation for brain tumor growth modeling}, author = {Ezhov, Ivan and Lipkova, Jana and Shit, Suprosanna and Kofler, Florian and Collomb, Nore and Lemasson, Benjamin and Barbier, Emmanuel and Menze, Bjoern}, booktitle = {International Conference on Medical Image Computing and Computer-Assisted Intervention}, pages = {787--795}, year = {2019}, organization = {Springer, Cham}, abbr = {ezhov2019neural.png}, arxiv = {https://arxiv.org/pdf/1907.00973}, html = {https://link.springer.com/chapter/10.1007/978-3-030-32245-8_87} }

Understanding the dynamics of brain tumor progression is essential for optimal treatment planning. Cast in a mathematical formulation, it is typically viewed as evaluation of a system of partial differential equations, wherein the physiological processes that govern the growth of the tumor are considered. To personalize the model, i.e. find a relevant set of parameters, with respect to the tumor dynamics of a particular patient, the model is informed from empirical data, e.g., medical images obtained from diagnostic modalities, such as magnetic-resonance imaging. Existing model-observation coupling schemes require a large number of forward integrations of the biophysical model and rely on simplifying assumption on the functional form, linking output of the model with the image information. In this work, we propose a learning-based technique for the estimation of tumor growth model parameters from medical scans. The technique allows for explicit evaluation of the posterior distribution of the parameters by sequentially training a mixture-density network, relaxing the constraint on the functional form and reducing the number of samples necessary to propagate through the forward model for the estimation. We test the method on synthetic and real scans of rats injected with brain tumors to calibrate the model and to predict tumor progression.

2018

Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge
Spyridon Bakas, Mauricio Reyes, András Jakab, Stefan Bauer, Markus Rempfler, Alessandro Crimi, RT Shinohara, Christoph Berger, SM Ha, Martin Rozycki, and others
arXiv preprint arXiv:1811.02629

@article{bakas2018identifying, title = {Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge}, author = {Bakas, Spyridon and Reyes, Mauricio and Jakab, Andr{\'a}s and Bauer, Stefan and Rempfler, Markus and Crimi, Alessandro and Shinohara, RT and Berger, Christoph and Ha, SM and Rozycki, Martin and others}, journal = {arXiv preprint arXiv:1811.02629}, volume = {10}, year = {2018}, html = {https://arxiv.org/abs/1811.02629}, abbr = {bakas2018identifying.png} }

Gliomas are the most common primary brain malignancies, with different degrees of aggressiveness, variable prognosis and various heterogeneous histologic sub-regions, i.e., peritumoral edematous/invaded tissue, necrotic core, active and non-enhancing core. This intrinsic heterogeneity is also portrayed in their radio-phenotype, as their sub-regions are depicted by varying intensity profiles disseminated across multi-parametric magnetic resonance imaging (mpMRI) scans, reflecting varying biological properties. Their heterogeneous shape, extent, and location are some of the factors that make these tumors difficult to resect, and in some cases inoperable. The amount of resected tumor is a factor also considered in longitudinal scans, when evaluating the apparent tumor for potential diagnosis of progression. Furthermore, there is mounting evidence that accurate segmentation of the various tumor sub-regions can offer the basis for quantitative image analysis towards prediction of patient overall survival. This study assesses the state-of-the-art machine learning (ML) methods used for brain tumor image analysis in mpMRI scans, during the last seven instances of the International Brain Tumor Segmentation (BraTS) challenge, i.e., 2012-2018. Specifically, we focus on i) evaluating segmentations of the various glioma sub-regions in pre-operative mpMRI scans, ii) assessing potential tumor progression by virtue of longitudinal growth of tumor sub-regions, beyond use of the RECIST/RANO criteria, and iii) predicting the overall survival from pre-operative mpMRI scans of patients that underwent gross total resection. Finally, we investigate the challenge of identifying the best ML algorithms for each of these tasks, considering that apart from being diverse on each instance of the challenge, the multi-institutional mpMRI BraTS dataset has also been a continuously evolving/growing dataset.

S-Leaping: An Adaptive, Accelerated Stochastic Simulation Algorithm, Bridging τ-Leaping and R-Leaping
Jana Lipkova, Georgios Arampatzis, Philippe Chatelain, Bjoern Menze, and Petros Koumoutsakos
Bulletin of Mathematical Biology

@article{lipkova2018s, title = {S-Leaping: An Adaptive, Accelerated Stochastic Simulation Algorithm, Bridging $\tau$-Leaping and R-Leaping}, author = {Lipkova, Jana and Arampatzis, Georgios and Chatelain, Philippe and Menze, Bjoern and Koumoutsakos, Petros}, journal = {Bulletin of Mathematical Biology}, volume = {80}, number = {459}, year = {2018}, publisher = {Springer Nature}, html = {https://link.springer.com/article/10.1007/s11538-018-0464-9}, software = {https://github.com/JanaLipkova/SSM}, abbr = {lipkova2018s.png}, arxiv = {https://arxiv.org/pdf/1802.00296.pdf} }

We propose the S-leaping algorithm for the acceleration of Gillespie’s stochastic simulation algorithm that combines the advantages of the two main accelerated methods; the τ-leaping and R-leaping algorithms. These algorithms are known to be efficient under different conditions; the τ-leaping is efficient for non-stiff systems or systems with partial equilibrium, while the R-leaping performs better in stiff systems thanks to an efficient sampling procedure. However, even a small change in a system’s set up can critically affect the nature of the simulated system and thus reduce the efficiency of an accelerated algorithm. The proposed algorithm combines the efficient time step selection from the τ-leaping with the effective sampling procedure from the R-leaping algorithm. The S-leaping is shown to maintain its efficiency under different conditions and in the case of large and stiff systems or systems with fast dynamics, the S-leaping outperforms both methods. We demonstrate the performance and the accuracy of the S-leaping in comparison with the τ-leaping and R-leaping on a number of benchmark systems involving biological reaction networks.
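
For orientation, the exact method that the leaping algorithms accelerate, Gillespie's direct method, can be sketched for a toy reversible isomerization A ⇌ B. This is not S-leaping itself and is not taken from the paper or the linked SSM repository; the function name, the rate constants, and the toy system are illustrative assumptions.

```python
import random
from typing import List, Tuple


def gillespie_isomerization(
    a0: int, b0: int, k_f: float, k_b: float, t_end: float, seed: int = 0
) -> List[Tuple[float, int, int]]:
    """Exact stochastic simulation of A <-> B with Gillespie's direct method.

    Hypothetical toy example; returns a list of (time, #A, #B) states.
    """
    rng = random.Random(seed)
    t, a, b = 0.0, a0, b0
    trajectory = [(t, a, b)]
    while True:
        prop_f = k_f * a  # propensity of A -> B
        prop_b = k_b * b  # propensity of B -> A
        total = prop_f + prop_b
        if total == 0.0:
            break  # no reaction can fire any more
        # Waiting time to the next reaction is exponential with rate `total`.
        t += rng.expovariate(total)
        if t > t_end:
            break
        # Pick which reaction fires, proportionally to its propensity.
        if rng.random() * total < prop_f:
            a, b = a - 1, b + 1
        else:
            a, b = a + 1, b - 1
        trajectory.append((t, a, b))
    return trajectory


traj = gillespie_isomerization(a0=100, b0=0, k_f=1.0, k_b=0.5, t_end=5.0)
print(len(traj), traj[-1])
```

The direct method fires one reaction per step, which is why it becomes slow for large or fast systems; leaping methods instead advance by many reaction events at once at the cost of an approximation.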

Automated whole-body bone lesion detection for multiple myeloma on 68Ga-pentixafor PET/CT imaging using deep learning methods
Lina Xu, Giles Tetteh, Jana Lipkova, Yu Zhao, Hongwei Li, Patrick Christ, Marie Piraud, Andreas Buck, Kuangyu Shi, and Bjoern H Menze
Contrast media & molecular imaging

@article{xu2018automated, title = {Automated whole-body bone lesion detection for multiple myeloma on 68Ga-pentixafor PET/CT imaging using deep learning methods}, author = {Xu, Lina and Tetteh, Giles and Lipkova, Jana and Zhao, Yu and Li, Hongwei and Christ, Patrick and Piraud, Marie and Buck, Andreas and Shi, Kuangyu and Menze, Bjoern H}, journal = {Contrast media \& molecular imaging}, volume = {2018}, year = {2018}, publisher = {Hindawi}, abbr = {xu2018automated.png}, html = {https://www.hindawi.com/journals/cmmi/2018/2391925/} }

The identification of bone lesions is crucial in the diagnostic assessment of multiple myeloma (MM). 68Ga-Pentixafor PET/CT can capture the abnormal molecular expression of CXCR-4 in addition to anatomical changes. However, whole-body detection of dozens of lesions on hybrid imaging is tedious and error prone. It is even more difficult to identify lesions with a large heterogeneity. This study employed deep learning methods to automatically combine characteristics of PET and CT for whole-body MM bone lesion detection in a 3D manner. Two convolutional neural networks (CNNs), V-Net and W-Net, were adopted to segment and detect the lesions. The feasibility of deep learning for lesion detection on 68Ga-Pentixafor PET/CT was first verified on digital phantoms generated using realistic PET simulation methods. Then the proposed methods were evaluated on real 68Ga-Pentixafor PET/CT scans of MM patients. The preliminary results showed that deep learning methods can leverage multimodal information for spatial feature representation, and W-Net obtained the best result for segmentation and lesion detection. It also outperformed traditional machine learning methods such as random forest classifier (RF), k-nearest neighbors (k-NN), and support vector machine (SVM). The proof-of-concept study encourages further development of deep learning approaches for MM lesion detection in population studies.

2017

Automatic liver and tumor segmentation of CT and MRI volumes using cascaded fully convolutional neural networks
Patrick Ferdinand Christ, Florian Ettlinger, Felix Grün, Mohamed Ezzeldin A Elshaera, Jana Lipkova, Sebastian Schlecht, Freba Ahmaddy, Sunil Tatavarty, Marc Bickel, Patrick Bilic, and others
arXiv preprint arXiv:1702.05970

@article{christ2017automatic, title = {Automatic liver and tumor segmentation of CT and MRI volumes using cascaded fully convolutional neural networks}, author = {Christ, Patrick Ferdinand and Ettlinger, Florian and Gr{\"u}n, Felix and Elshaera, Mohamed Ezzeldin A and Lipkova, Jana and Schlecht, Sebastian and Ahmaddy, Freba and Tatavarty, Sunil and Bickel, Marc and Bilic, Patrick and others}, journal = {arXiv preprint arXiv:1702.05970}, year = {2017}, abbr = {christ2017automatic.png}, html = {https://arxiv.org/abs/1702.05970} }

Automatic segmentation of the liver and hepatic lesions is an important step towards deriving quantitative biomarkers for accurate clinical diagnosis and computer-aided decision support systems. This paper presents a method to automatically segment liver and lesions in CT and MRI abdomen images using cascaded fully convolutional neural networks (CFCNs) enabling the segmentation of a large-scale medical trial or quantitative image analysis. We train and cascade two FCNs for a combined segmentation of the liver and its lesions. In the first step, we train an FCN to segment the liver as ROI input for a second FCN. The second FCN solely segments lesions within the predicted liver ROIs of step 1. CFCN models were trained on an abdominal CT dataset comprising 100 hepatic tumor volumes. Validations on further datasets show that CFCN-based semantic liver and lesion segmentation achieves Dice scores over 94% for liver with computation times below 100s per volume. We further experimentally demonstrate the robustness of the proposed method on 38 MRI liver tumor volumes and the public 3DIRCAD dataset.
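
The Dice score quoted in the abstract measures overlap between a predicted and a reference mask as 2|P ∩ T| / (|P| + |T|). A minimal sketch follows; the function name, the flattened binary-mask representation, and the convention for two empty masks are assumptions of this example, not details from the paper.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice overlap between two binary masks given as flattened voxel lists.

    Hypothetical helper for illustration only.
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labeled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if size_sum == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / size_sum


predicted = [1, 1, 1, 0, 0, 0]
reference = [0, 1, 1, 1, 0, 0]
print(round(dice_score(predicted, reference), 3))
```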

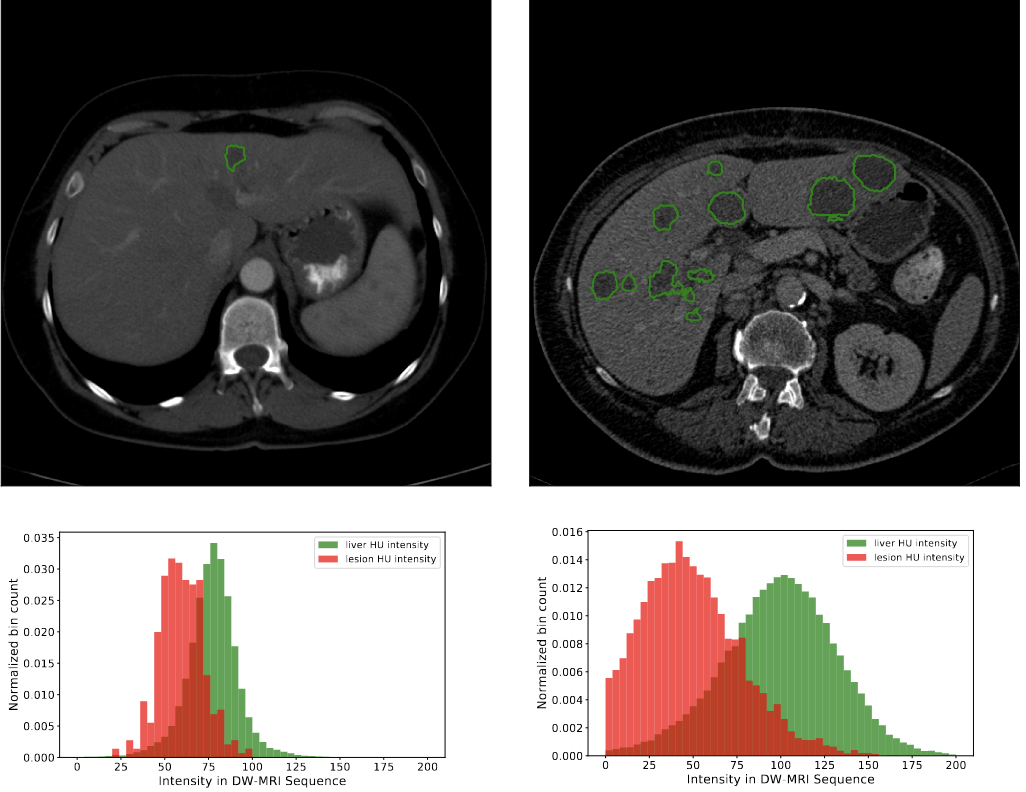

Automated unsupervised segmentation of liver lesions in CT scans via Cahn-Hilliard phase separation
Jana Lipková, Markus Rempfler, Patrick Christ, John Lowengrub, and Bjoern H Menze
arXiv preprint arXiv:1704.02348

@article{lipkova2017automated, title = {Automated unsupervised segmentation of liver lesions in CT scans via Cahn-Hilliard phase separation}, author = {Lipkov{\'a}, Jana and Rempfler, Markus and Christ, Patrick and Lowengrub, John and Menze, Bjoern H}, journal = {arXiv preprint arXiv:1704.02348}, year = {2017}, abbr = {lipkova2017automated.png}, html = {https://arxiv.org/pdf/1704.02348.pdf} }

The segmentation of liver lesions is crucial for detection, diagnosis and monitoring progression of liver cancer. However, design of accurate automated methods remains challenging due to high noise in CT scans, low contrast between liver and lesions, as well as large lesion variability. We propose a 3D automatic, unsupervised method for liver lesion segmentation using a phase separation approach. It is assumed that liver is a mixture of two phases: healthy liver and lesions, represented by different image intensities polluted by noise. The Cahn-Hilliard equation is used to remove the noise and separate the mixture into two distinct phases with well-defined interfaces. This simplifies the lesion detection and segmentation task drastically and enables segmentation of liver lesions by thresholding the Cahn-Hilliard solution. The method was tested on the 3Dircadb and LITS datasets.

2011

Analysis of Brownian dynamics simulations of reversible bimolecular reactions
Jana Lipková, Konstantinos C Zygalakis, S Jonathan Chapman, and Radek Erban
SIAM Journal on Applied Mathematics

@article{lipkova2011analysis, title = {Analysis of Brownian dynamics simulations of reversible bimolecular reactions}, author = {Lipkov{\'a}, Jana and Zygalakis, Konstantinos C and Chapman, S Jonathan and Erban, Radek}, journal = {SIAM Journal on Applied Mathematics}, volume = {71}, number = {3}, pages = {714--730}, year = {2011}, publisher = {Society for Industrial and Applied Mathematics}, html = {https://doi.org/10.1137/100794213}, abbr = {lipkova2011analysis.png}, arxiv = {https://arxiv.org/pdf/1005.0698.pdf} }

A class of Brownian dynamics algorithms for stochastic reaction-diffusion models which include reversible bimolecular reactions is presented and analyzed. The method is a generalization of the λ-ρ model for irreversible bimolecular reactions. The formulae relating the experimentally measurable quantities (reaction rate constants and diffusion constants) with the algorithm parameters are derived. The probability of geminate recombination is also investigated.